OPENCUE INTEGRATION AT DUPP

may 8, 2020

Note: This is a text about software for render management. Enjoy ;)

january 6, 2021

Note: Added some information on local RQD override values. Needed after updating to v0.4.95

october 15, 2021

Note: Added some information on migrating the database after updating to v0.14.5

january 23, 2024

Note: Added some information on updating to v0.22.14

INTRODUCTION

Well... changing your render management solution isn't easy as pie. And you don't want to make a wrong choice that you regret after tons of hours of integration work. But "luckily" (for us) the developer of our previous system went out of business, so we had to come to a decision on this. I don't want to name our previous system, but it filled almost all our needs and we had integrated our software into it. But the user interface was laggy and we experienced both server-side and client-side crashes. Sorting and filtering of jobs also weren't very intuitive.

WHAT ARE OUR NEEDS?

* a stable system that utilizes as many of the cores as possible.

* able to integrate into our main tools for rendering, transcoding and publishing.

* Windows, Unix and Mac

* sending notifications to artists (e-mail and Slack)

* dependencies to connect series of events.

* searching, filtering and managing running jobs

* stability!

* strong community!

WHY WE CHOSE OPENCUE

A year ago my first choice would have been Pixar's Tractor. I know it has everything we're looking for and I have years of experience with it. Deadline also seems to have a nice community and AWS integration. I have only scratched the surface of Deadline while helping students with it, and to me it looks much the same as our previous solution. And then we have Rush, good old Rush, many people's favorite, but it hasn't seen a new release in years and the interface is from the late nineties.

We are really excited about the Academy Software Foundation and how it will shape the VFX industry in the years to come. At the moment OpenCue doesn't have all the functionality we need. The main feature I'm missing is more precise control over license management (e.g. if you have 10 Nuke render licenses but would like to run 5 instances on each node, that's 50 Nuke instances that should only use 10 licenses). You can't control this at the moment, but it's on the roadmap and there are workarounds. Another thing is that Python 2.7 integration can be problematic, at least in my experience on Windows. That has to do with both the gRPC and PySide2 libraries. So if you are using software on a Python 2.7 platform, you need to build a wrapper that sends jobs to a Python 3.7 environment.

Sorry for babbling away from the subject... so why did we choose OpenCue?

1. It's free, it's open source.

2. Very scalable

3. Strong contributors: SPI, Google, Microsoft

4. Being an early adopter is a great challenge :)

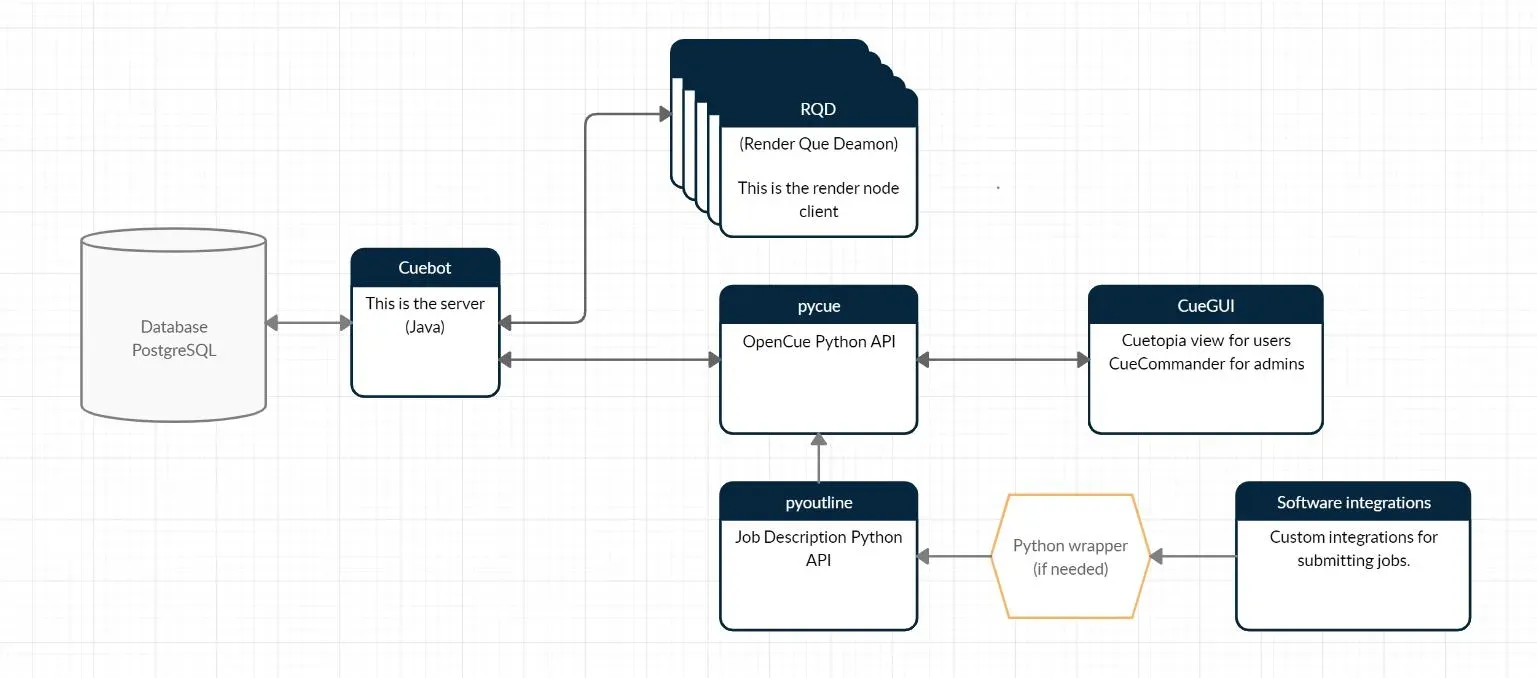

THE OPENCUE ARCHITECTURE

INSTALLATION

I will not get into this in detail. There's a great guide at opencue.io/docs/getting-started. And for Windows users, the VFX Pipeline channel on YouTube has three videos on how to get started (youtube.com/watch?v=Vk-huejruG0).

Once everything is up and running you can try submitting jobs using CueSubmit. It supports jobs like Shell, Maya, Nuke and Blender by default. It's also a good idea to get familiar with CueAdmin. With CueAdmin you can create shows and do a lot of other stuff. Check:

cueadmin -server %OPENCUE_SERVER% -h

UNDERSTANDING OPENCUE

You can't delete jobs! All jobs will be stored in the database forever and ever. I got over this quite fast, but it is quite frustrating when you submit hundreds of jobs during development.

"Eat dead frames", what's this? When a frame fails (the process exits with an error code) it will appear as DEAD in OpenCue. The job will be visible until you either retry, eat or kill it.

- Retry - stops rendering and retries the frame on another proc.

- Eat - stops rendering and doesn’t try to continue processing the frame.

- Kill - stops rendering and books to another proc.
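The "Eat dead frames" action can also be scripted. This is a minimal sketch, not OpenCue's own code: eat_dead_frames is a made-up helper, and it only assumes frame objects that expose state() and eat(), which I believe PyCue's frame wrapper does (check against your version). The usage shown in the trailing comment is likewise an assumption and needs a running cuebot.

```python
def eat_dead_frames(frames, dead_state):
    """Eat every frame whose state equals dead_state; return the eaten frames."""
    eaten = []
    for frame in frames:
        if frame.state() == dead_state:
            frame.eat()
            eaten.append(frame)
    return eaten


# Possible usage against a live cuebot (assumption, not tested here):
#   import opencue
#   from opencue.compiled_proto import job_pb2
#   job = opencue.api.findJob('name-of-your-job')
#   eat_dead_frames(job.getFrames(), job_pb2.DEAD)
```

Swapping frame.eat() for frame.retry() would give the "Retry dead frames" behaviour instead.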

What is booking? You can look at cuebot (the server) as a scheduler. If you turn booking off, the frame/layer/job will not be scheduled. A "proc" is a "portion of cores or memory". E.g. if you want a Nuke chunk to book 4 cores or 2 GB of RAM, it will hold that reservation until the chunk is done.
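To get a feeling for what a proc means in practice, here is a toy calculation of how many procs of a given size fit on one host. It is only an illustration: procs_per_host and the host sizes are made up, and cuebot's real scheduler takes much more into account (tags, subscriptions, limits, priorities).

```python
def procs_per_host(host_cores, host_mem_gb, proc_cores, proc_mem_gb):
    """Toy estimate of how many procs of one size fit on a single host."""
    if proc_cores <= 0 or proc_mem_gb <= 0:
        raise ValueError('proc size must be positive')
    # A proc reserves both cores and memory, so the scarcer resource decides.
    by_cores = int(host_cores // proc_cores)
    by_memory = int(host_mem_gb // proc_mem_gb)
    return min(by_cores, by_memory)


# A hypothetical 16 core / 32 GB host with 4 core / 2 GB Nuke procs:
print(procs_per_host(16, 32, 4, 2))
```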

You can't submit a job if you don't have a show registered. So before submitting the first job on a show you need to register the show. You can do this with cueadmin:

cueadmin -server %OPENCUE_SERVER% -force -create-show NAME
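If you would rather register the show from a script, the same cueadmin call can be wrapped in Python. A minimal sketch: the helper names and the server name cuebot01 are made up, and it assumes cueadmin is on the PATH of the environment you run it from.

```python
import subprocess


def create_show_command(server, show):
    """Build the cueadmin argument list for registering a show."""
    return ['cueadmin', '-server', server, '-force', '-create-show', show]


def create_show(server, show):
    """Run cueadmin and return its exit code (0 means success)."""
    return subprocess.call(create_show_command(server, show))


# Example (hypothetical server and show names):
print(' '.join(create_show_command('cuebot01', 'myshow')))
```

Passing the arguments as a list avoids quoting problems if a show name contains unusual characters.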

What is an allocation? It's a way of grouping hosts. An allocation combines a facility with a tag, for example local.general. And what's a subscription? It is like a rule for a show: it defines which allocations the show can render on. Services are a set of default values for a layer (tags, cores and memory). Limits are a way of giving a layer a maximum number of frames that can run at the same time. I'm currently missing the licence limitation functionality mentioned above.
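The gap between a frame limit and a licence limit is easy to show with numbers. This is a toy model, assuming a licence is checked out once per host (which is what the 10 licences versus 50 instances example implies). licenses_in_use and the host names are made up for the illustration.

```python
def licenses_in_use(running, per_host):
    """Count licences for running renders given as (host, frame_name) pairs."""
    running = list(running)
    if per_host:
        # One licence per host, no matter how many instances run on it.
        return len({host for host, _ in running})
    # One licence per running frame, which is how a frame limit counts.
    return len(running)


# 10 hosts, each running 5 Nuke instances.
running = [('node%02d' % n, 'frame_%d_%d' % (n, i))
           for n in range(10) for i in range(5)]
print(licenses_in_use(running, per_host=False))
print(licenses_in_use(running, per_host=True))
```

A limit of 10 on the layer would cap the farm at 10 running frames, while the licence server would actually allow all 50.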

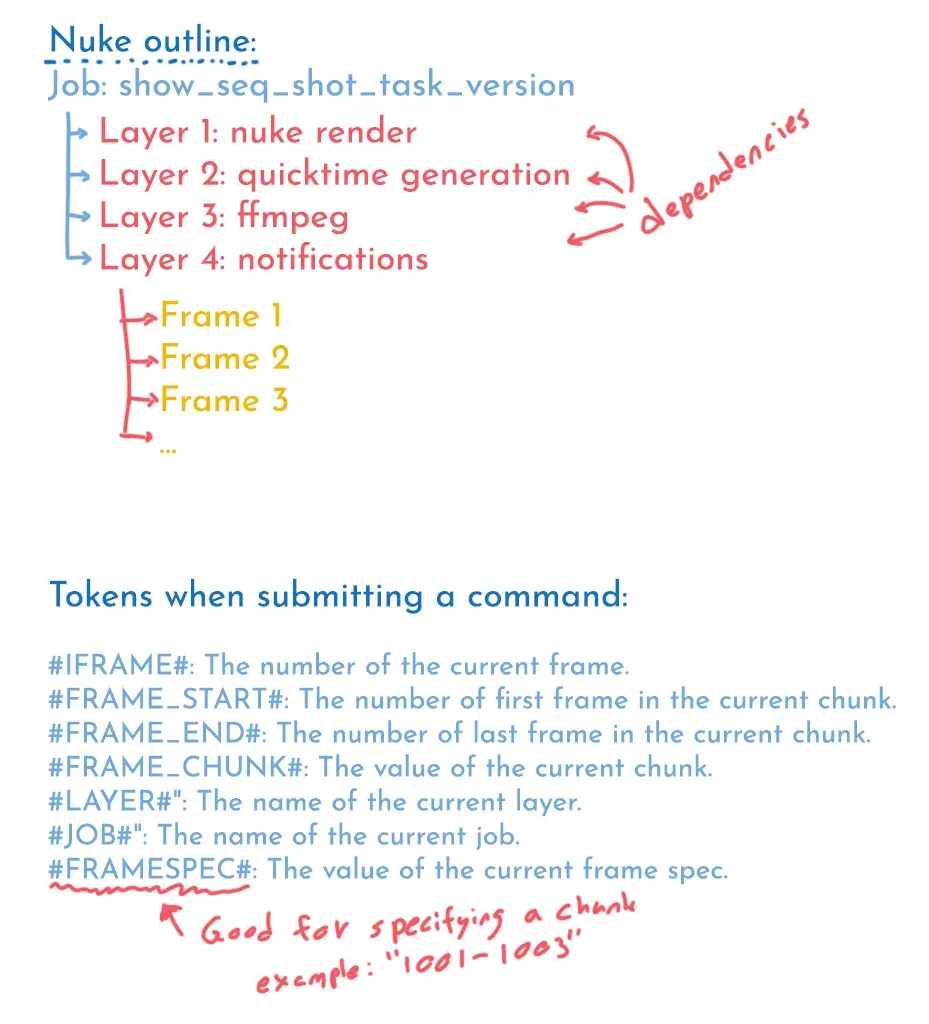

JOBS - PYOUTLINE

Let's look at some code. I'll share our Nuke integration below. Because we are using Nuke on a Python 2.7 platform, I send all the needed data to a Python 3.7 virtual environment before the job is submitted.

Simplified case submitting one job with one layer:

from outline import Outline, cuerun
from outline.modules.shell import Shell
from cuesubmit import JobTypes
from cuesubmit import Layer

def buildLayer(layerData, command, lastLayer=None):
    """Build a Shell layer from a LayerData object and a command string."""
    # Layers that book two or more cores are flagged as threadable.
    threadable = float(layerData.cores) >= 2
    layer = Shell(layerData.name, command=command.split(), chunk=layerData.chunk,
                  threads=float(layerData.cores), range=str(layerData.layerRange),
                  threadable=threadable)
    if layerData.services:
        layer.set_service(layerData.services[0])
    if layerData.limits:
        layer.set_limits(layerData.limits)
    if layerData.dependType and lastLayer:
        if layerData.dependType == 'Layer':
            layer.depend_all(lastLayer)
        else:
            layer.depend_on(lastLayer)
    return layer

def buildNukeLayer(layerData, lastLayer):
    nukeCmd = buildNukeCmd(layerData)
    return buildLayer(layerData, nukeCmd, lastLayer)

def buildNukeCmd(layerData):
    """From a layer, build a Nuke render command."""
    writeNodes = layerData.cmd.get('writeNodes')
    nukeFile = layerData.cmd.get('nukeFile')
    cmd = 'Nuke11.2.exe -F #FRAMESPEC# '
    cmd += '-X {} '.format(writeNodes)
    cmd += '-x {}'.format(nukeFile)
    return cmd

# seqName, nodes, file, fstart, fend, job, shotName, projectName and usr
# are supplied by the calling code.
NUKE_LAYER_DATA = {
    'name': seqName,
    'layerType': JobTypes.JobTypes.NUKE,
    'cmd': {'writeNodes': nodes, 'nukeFile': file},
    'layerRange': fstart+'-'+fend,
    'cores': '4',
    'chunk': '1',
    'limits': ['nuke_lic'],
    'services': ['nuke']
}

jobData = {
    "name": job,
    "shot": shotName,
    "show": projectName,
    "username": usr,
    "layers": [Layer.LayerData.buildFactory(**NUKE_LAYER_DATA)]
}

outline = Outline(jobData['name'], shot=jobData['shot'], show=jobData['show'], user=jobData['username'])

# Build the single Nuke layer and add it to the outline before launching.
outline.add_layer(buildNukeLayer(jobData['layers'][0], None))

jobs = cuerun.launch(outline, use_pycuerun=False)
for job in jobs:
    job.setPriority(5)
    job.setMaxCores(1000)
    job.setMaxRetries(3)

THIS IS OUR SET-UP:

nukeDispatcher.py

import subprocess
from subprocess import PIPE

# Open a new console, activate the OpenCue virtual environment and run the wrapper.
cmd = 'start cmd /K "'
cmd += 'echo Submitting to OpenCue & '
cmd += 'cd /d [PATH TO OPENCUE VIRTUAL ENVIRONMENT]\\Scripts & activate & '
cmd += 'set PYTHONPATH=%PYTHONPATH%;[adding some local libraries]& '
cmd += 'python [PATH TO SCRIPT]\\nukeCueWrapper.py'
cmd += ' --file '+comp_dirpath            # path to nuke script
cmd += ' --nodes "'+nodelist+'"'          # write nodes to render
cmd += ' --fstart '+startFrame
cmd += ' --fend '+endFrame
cmd += ' --fps '+framerate
cmd += ' --ingrid '+folderId              # this is the shot id from our asset manager
cmd += ' --proj "'+projectNameNice+'"'    # name of show
cmd += ' --shot "'+shotName+'"'           # name of shot (sq010_sh010)
cmd += ' --job "'+jobName+'"'             # name of task and version (compositing_v001)
cmd += ' --usr "'+str(userName)+'"'
cmd += ' --seq "'+input+'"'               # path of the selected read node on publish
cmd += ' --mov "'+output+'"'              # where the quicktime should be generated
cmd += ' --cs "'+colorspace+'"'
cmd += ' --lut "'+folderLut+'"'           # if a shot LUT should be applied to the quicktime
cmd += ' --qc "'+masterReadFile+'"'       # auto generate quicktime for quality check with main plate
cmd += ' --type "publish"'                # what type of job is this, render or publish
cmd += '"'                                # close the quote opened after /K
pipe = subprocess.call(cmd, stdin=PIPE, stderr=PIPE, stdout=PIPE, shell=True)

nukeCueWrapper.py

This script is mainly based on cuesubmit/tests/Submission_tests.py so that might be a good starting point.

from __future__ import print_function
from __future__ import division
from __future__ import absolute_import

import os
import subprocess
from datetime import date
import argparse
from builtins import str

from outline import Outline, cuerun
from outline.modules.shell import Shell
from cuesubmit import Constants
from cuesubmit import JobTypes
from cuesubmit import Layer

import pyDupp  # local stuff, not needed for opencue
import ingrid  # local stuff, not needed for opencue

PYTHON_BIN = ''  # path to python
os.environ['CUEBOT_HOSTS'] = '192.168.40.101'

def buildLayer(layerData, command, lastLayer=None):
    """Build a Shell layer from a LayerData object and a command string."""
    # Layers that book two or more cores are flagged as threadable.
    threadable = float(layerData.cores) >= 2
    layer = Shell(layerData.name, command=command.split(), chunk=layerData.chunk,
                  threads=float(layerData.cores), range=str(layerData.layerRange),
                  threadable=threadable)
    if layerData.services:
        layer.set_service(layerData.services[0])
    if layerData.limits:
        layer.set_limits(layerData.limits)
    if layerData.dependType and lastLayer:
        if layerData.dependType == 'Layer':
            layer.depend_all(lastLayer)
        else:
            layer.depend_on(lastLayer)
    return layer

def buildNukeLayer(layerData, lastLayer):
    nukeCmd = buildNukeCmd(layerData)
    return buildLayer(layerData, nukeCmd, lastLayer)

def buildQtLayer(layerData, lastLayer):
    qtCmd = buildQtCmd(layerData)
    return buildLayer(layerData, qtCmd, lastLayer)

def buildNotifyLayer(layerData, lastLayer):
    notifyCmd = buildNotifyCmd(layerData)
    return buildLayer(layerData, notifyCmd, lastLayer)

def buildFfmpegLayer(layerData, lastLayer):
    ffmpegCmd = buildFfmpegCmd(layerData)
    return buildLayer(layerData, ffmpegCmd, lastLayer)

def buildNukeCmd(layerData):
    """From a layer, build a Nuke Render command."""
    writeNodes = layerData.cmd.get('writeNodes')
    nukeFile = layerData.cmd.get('nukeFile')
    if not nukeFile:
        raise ValueError('No Nuke file provided. Cannot submit job.')
    renderCommand = 'set NUKE_PATH=& '  # your nuke path here
    renderCommand += 'set foundry_LICENSE=4101@dupp01& '
    renderCommand += '"C:/Program Files/Nuke11.2v3/Nuke11.2.exe" -F #FRAMESPEC# '
    if writeNodes:
        renderCommand += '-X {} '.format(writeNodes)
    renderCommand += '-x {}'.format(nukeFile)
    return renderCommand

def buildQtCmd(layerData):
    # Using Nuke to render quicktimes with burn-ins. This is very facility
    # specific so there is no big point in sharing it. Please reach out if
    # you want to discuss. (The code that builds renderCommand is omitted.)
    return renderCommand

def buildNotifyCmd(layerData):
    # Sending notifications to artists and supervisors. We are using Slack
    # for all notifications. (The code that builds notifyCommand is omitted.)
    return notifyCommand

def buildFfmpegCmd(layerData):
    output = layerData.cmd.get('mov').replace('.mov', '.mp4')
    ffmpegCommand = 'l:\\bin\\ffmpeg\\ffmpeg.exe -i '+layerData.cmd.get('mov')+' -y -q:v 0 '+output
    return ffmpegCommand

def cuePublish(file, nodes, fstart, fend, fps, ingrid, proj, shot, job, usr, seq, mov, cs, lut, qc, type):
    # NOTE: seqName, movName, shotName and projectName are derived from the
    # arguments by facility-specific code that is left out of this listing.
    if type=='render':
        NUKE_LAYER_DATA = {
            'name': seqName,
            'layerType': JobTypes.JobTypes.NUKE,
            'cmd': {'writeNodes': nodes, 'nukeFile': file},
            'layerRange': fstart+'-'+fend,
            'cores': '4',
            'chunk': '1',
            'limits': ['nuke_lic'],
            'services': ['nuke']
        }
    elif type=='rendermov':
        NUKE_LAYER_DATA = {
            'name': seqName,
            'layerType': JobTypes.JobTypes.NUKE,
            'cmd': {'writeNodes': nodes, 'nukeFile': file},
            'layerRange': fstart+'-'+fend,
            'cores': '4',
            'chunk': str(int(fend)-int(fstart)+1),  # all frames on one node if .mov
            'limits': ['nuke_lic'],
            'services': ['nuke']
        }

    QT_LAYER_DATA = {
        'name': movName+'_qt',
        'layerType': JobTypes.JobTypes.SHELL,
        'cmd': {'fstart': fstart, 'fend': fend, 'fps': fps, 'ingrid': ingrid, 'usr': usr, 'seq': seq, 'mov': mov, 'cs': cs, 'lut': lut, 'qc': qc, 'type': type},
        'layerRange': fstart,
        'cores': '0',
        'limits': ['nuke_lic'],
        'services': ['nuke'],
        'dependType': Layer.DependType.Layer
    }

    FFMPEG_LAYER_DATA = {
        'name': movName.replace('.mov','.mp4')+'_ffmpeg',
        'layerType': 'ffmpeg',
        'cmd': {'mov': mov},
        'layerRange': fstart,
        'cores': '0',
        'limits': '',
        'services': '',
        'dependType': Layer.DependType.Layer
    }

    NOTIFICATION_LAYER_DATA = {
        'name': 'notify_on_slack',
        'layerType': 'notify',
        'cmd': {'fstart': fstart, 'fend': fend, 'project': projectName, 'shot': shotName, 'usr': usr, 'job': job, 'seq': seq, 'mov': mov, 'cs': cs, 'lut': lut, 'type': type},
        'layerRange': fstart,
        'cores': '0',
        'limits': '',
        'services': '',
        'dependType': Layer.DependType.Layer
    }

    if type=='publish':
        jobData = {
            "name": job,
            "shot": shotName,
            "show": projectName,
            "username": usr,
            "layers": [Layer.LayerData.buildFactory(**QT_LAYER_DATA),
                       Layer.LayerData.buildFactory(**FFMPEG_LAYER_DATA),
                       Layer.LayerData.buildFactory(**NOTIFICATION_LAYER_DATA)]
        }
    elif type=='render' or type=='rendermov':  # This is a render
        jobData = {
            "name": job,
            "shot": shotName,
            "show": projectName,
            "username": usr,
            "layers": [Layer.LayerData.buildFactory(**NUKE_LAYER_DATA),
                       Layer.LayerData.buildFactory(**QT_LAYER_DATA),
                       Layer.LayerData.buildFactory(**NOTIFICATION_LAYER_DATA)]
        }
    else:
        print('Unknown job type.')
        return  # nothing to submit without jobData

    print('.')
    outline = Outline(jobData['name'], shot=jobData['shot'], show=jobData['show'], user=jobData['username'])
    print('..')

    # Build the layers in order; each layer can depend on the previous one.
    lastLayer = None
    for layerData in jobData['layers']:
        if layerData.layerType == JobTypes.JobTypes.MAYA:
            # buildMayaLayer is not defined in this script; no Maya layers are submitted here.
            layer = buildMayaLayer(layerData, lastLayer)
        elif layerData.layerType == JobTypes.JobTypes.SHELL:
            layer = buildQtLayer(layerData, lastLayer)
        elif layerData.layerType == JobTypes.JobTypes.NUKE:
            layer = buildNukeLayer(layerData, lastLayer)
        elif layerData.layerType == 'notify':
            layer = buildNotifyLayer(layerData, lastLayer)
        elif layerData.layerType == 'ffmpeg':
            layer = buildFfmpegLayer(layerData, lastLayer)
        else:
            raise ValueError('unrecognized layer type %s' % layerData.layerType)
        outline.add_layer(layer)
        lastLayer = layer

    if 'facility' in jobData:
        outline.set_facility(jobData['facility'])

    print('...')
    jobs = cuerun.launch(outline, use_pycuerun=False)
    for job in jobs:
        job.setPriority(5)
        job.setMaxCores(1000)
        job.setMaxRetries(3)
        #job.addComment('Subject','Comment')
        print('....\nJob \"'+job.name()+'\" submitted!')
    print('(you can close this window)\n')

def parseArgs():
    parser = argparse.ArgumentParser()
    parser.add_argument("--file", help="File path to Nuke scene file.")
    parser.add_argument("--nodes", help="Write nodes separated by ,")
    parser.add_argument("--fstart", help="Start frame.")
    parser.add_argument("--fend", help="End frame.")
    parser.add_argument("--fps", help="Framerate.")
    parser.add_argument("--ingrid", help="Ingrid folder ID.")
    parser.add_argument("--proj", help="Project name")
    parser.add_argument("--shot", help="Shot name path")
    parser.add_argument("--job", help="Name of job.")
    parser.add_argument("--usr", help="Name of user.")
    parser.add_argument("--seq", help="File path to output files")
    parser.add_argument("--mov", help="File path to output quicktime")
    parser.add_argument("--cs", help="Colorspace of output sequence.")
    parser.add_argument("--lut", help="Apply LUT to quicktime. Enter LUT name")
    parser.add_argument("--qc", help="Path to source file for QC use")
    parser.add_argument("--type", help="Job type. render or publish")
    return parser.parse_args()

if __name__ == '__main__':
    args = parseArgs()
    cuePublish(args.file, args.nodes, args.fstart, args.fend, args.fps, args.ingrid, args.proj, args.shot, args.job, args.usr, args.seq, args.mov, args.cs, args.lut, args.qc, args.type)
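A note on the chunk value used in the layer data: with chunk set to 1 every frame becomes its own task, while the rendermov case sets chunk to the full range so all frames land on one node. The sketch below is a toy model of how a frame range divides into chunks. split_into_chunks is a made-up helper for illustration, not OpenCue's own code.

```python
def split_into_chunks(fstart, fend, chunk):
    """Return (first, last) frame pairs, one per chunk of at most `chunk` frames."""
    if chunk < 1:
        raise ValueError('chunk must be at least 1')
    chunks = []
    first = fstart
    while first <= fend:
        last = min(first + chunk - 1, fend)
        chunks.append((first, last))
        first = last + 1
    return chunks


fstart, fend = 1001, 1010
# One frame per chunk, as in the 'render' case.
print(split_into_chunks(fstart, fend, 1))
# The whole range in a single chunk, as in the 'rendermov' case.
print(split_into_chunks(fstart, fend, fend - fstart + 1))
```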

UPDATES

january 6, 2021

After updating to OpenCue v0.4.95 all hostnames were replaced by IPs (https://github.com/AcademySoftwareFoundation/OpenCue/pull/739). To fix this we need to set a variable called RQD_USE_IP_AS_HOSTNAME in rqd.conf. On Windows this file should be placed here: %LOCALAPPDATA%\OpenCue\

If the folder doesn't exist, just create one named OpenCue and create an empty file called rqd.conf inside. Insert the following into rqd.conf:

[Override]
RQD_USE_IP_AS_HOSTNAME=FALSE

You can also override the number of cores by inserting OVERRIDE_CORES=32 (32 will show up as 16 in Cuetopia). We do this by copying a global rqd.conf to each host in a client startup script.
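A startup script could also write the file instead of copying it. The following is a minimal sketch of that variant using Python's configparser. write_rqd_conf is a made-up helper, and the folder in the usage comment assumes the Windows location mentioned above.

```python
import configparser
import os


def write_rqd_conf(folder, overrides):
    """Create `folder` if needed and write rqd.conf with an [Override] section."""
    os.makedirs(folder, exist_ok=True)
    parser = configparser.RawConfigParser()
    parser.optionxform = str  # keep option names in upper case
    parser.add_section('Override')
    for key, value in overrides.items():
        parser.set('Override', key, str(value))
    path = os.path.join(folder, 'rqd.conf')
    with open(path, 'w') as handle:
        parser.write(handle, space_around_delimiters=False)
    return path


# Possible usage on a Windows render node:
#   folder = os.path.join(os.environ['LOCALAPPDATA'], 'OpenCue')
#   write_rqd_conf(folder, {'RQD_USE_IP_AS_HOSTNAME': 'FALSE', 'OVERRIDE_CORES': 32})
```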

october 15, 2021

We needed to add some columns to the database to get v0.14.5 running.

We got this error: "ERROR: column host.int_gpus doesn't exist"

Download the database migrations from GitHub (https://github.com/AcademySoftwareFoundation/OpenCue/tree/c22fe12721bed24b814184ea78c5e447b3b6a477/cuebot/src/main/resources/conf/ddl/postgres/migrations)

and follow the instructions on the OpenCue website:

https://www.opencue.io/docs/other-guides/applying-database-migrations/
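When you have skipped several releases it helps to see which migration files are still missing. This is a small sketch, assuming the files follow the V<number>__<description>.sql naming that I believe the migrations folder uses (check the folder before relying on it). pending_migrations and the file names in the example are made up, and applying the files is still best done by following the guide above.

```python
import re

# Matches names such as V12__Some_description.sql and captures the number.
MIGRATION_PATTERN = re.compile(r'^V(\d+)__.+\.sql$')


def pending_migrations(filenames, current_version):
    """Return migration files newer than current_version, in numeric order."""
    versioned = []
    for name in filenames:
        match = MIGRATION_PATTERN.match(name)
        if match and int(match.group(1)) > current_version:
            versioned.append((int(match.group(1)), name))
    # Sort on the number so that V10 comes after V9, not before V2.
    return [name for _, name in sorted(versioned)]


# Hypothetical file names, database currently at version 8:
files = ['V10__example_b.sql', 'V2__example_a.sql', 'V9__example_c.sql', 'README.md']
print(pending_migrations(files, 8))
```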

january 23, 2024

Migrated the database to v0.22.14 using the migrations from this link:

https://github.com/AcademySoftwareFoundation/OpenCue/tree/master/cuebot/src/main/resources/conf/ddl/postgres/migrations

Happy rendering!

CONTACT

Let me know your thoughts or reach out if you have any questions.

Best,

Anders